ref: Wei et al. (2022)

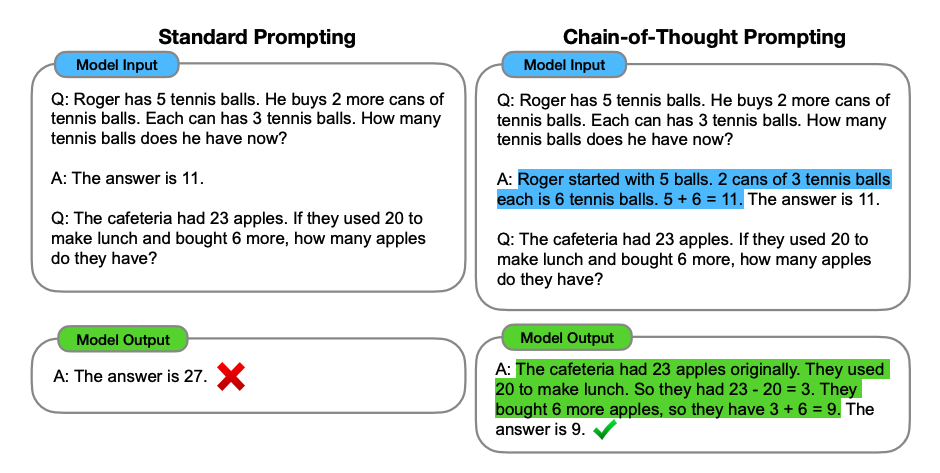

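As the figure shows, the model originally could not reason its way to the answer. But once GPT is shown how to work through the derivation, it can reach the final answer as well. In other words, by demonstrating the reasoning, you let GPT pick up the reasoning logic, which improves accuracy. Once I understood this, it struck me as very similar to how we learn to build up mathematics: you teach the logic step by step and stack each step on top of the last.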
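To make the contrast concrete, here is a minimal Python sketch that writes the same one-shot exemplar two ways. It assumes a plain completion-style prompt with `Q:`/`A:` markers. The exemplar and target questions are hypothetical arithmetic word problems in the style of Wei et al. (2022), and `one_shot_prompt` is an illustrative helper, not part of any library. The only difference between the two prompts is whether the exemplar answer spells out the intermediate steps.

```python
# Minimal sketch: the same one-shot exemplar written two ways.
# Questions and helper names are hypothetical illustrations.

QUESTION = (
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?"
)

# Standard prompting: the exemplar answer states only the result.
STANDARD_ANSWER = "The answer is 11."

# Chain-of-thought prompting: the exemplar answer writes out each step
# before the result, so the model can imitate the reasoning pattern.
COT_ANSWER = (
    "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11."
)


def one_shot_prompt(exemplar_answer: str, new_question: str) -> str:
    """Build a one-shot prompt: one worked exemplar, then the new question."""
    return f"Q: {QUESTION}\nA: {exemplar_answer}\n\nQ: {new_question}\nA:"


if __name__ == "__main__":
    target = (
        "The cafeteria had 23 apples. If they used 20 to make lunch "
        "and bought 6 more, how many apples do they have?"
    )
    print(one_shot_prompt(STANDARD_ANSWER, target))
    print("-" * 40)
    print(one_shot_prompt(COT_ANSWER, target))
```

Nothing is sent to a model here; you would pass either string to whichever GPT endpoint you use and compare the two completions.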

Chain-of-thought (CoT) prompting enables complex reasoning capabilities through intermediate reasoning steps. You can combine it with few-shot prompting to get better results on more complex tasks that require reasoning before responding.
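Combining CoT with few-shot prompting amounts to stacking several worked exemplars before the new question. The sketch below assumes each exemplar ends with the sentence "The answer is X.", so the final answer can be pulled out of the model's completion with a regular expression. `Exemplar`, `build_few_shot_cot_prompt`, `extract_answer`, and the odd-number exemplars are hypothetical names and data chosen for illustration; no model is called.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One worked example: a question, its written-out reasoning, and the final answer."""
    question: str
    reasoning: str
    answer: str


# Hypothetical exemplars; each reasoning chain was checked by hand.
EXEMPLARS = [
    Exemplar(
        question="The odd numbers in this group add up to an even number: 4, 8, 9, 15, 12, 2, 1.",
        reasoning="Adding all the odd numbers (9, 15, 1) gives 25.",
        answer="False",
    ),
    Exemplar(
        question="The odd numbers in this group add up to an even number: 17, 10, 19, 4, 8, 12, 24.",
        reasoning="Adding all the odd numbers (17, 19) gives 36.",
        answer="True",
    ),
]

# Assumes the answer sentence is the last thing on its line.
ANSWER_PATTERN = re.compile(r"The answer is\s+([^\n]+)")


def build_few_shot_cot_prompt(exemplars: list[Exemplar], question: str) -> str:
    """Stack the worked exemplars, then leave the final "A:" open for the model."""
    blocks = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in exemplars
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def extract_answer(completion: str) -> str | None:
    """Return the text after the last "The answer is", or None if it is missing."""
    matches = ANSWER_PATTERN.findall(completion)
    if not matches:
        return None
    return matches[-1].strip().rstrip(".")


if __name__ == "__main__":
    target = "The odd numbers in this group add up to an even number: 15, 32, 5, 13, 82, 7, 1."
    print(build_few_shot_cot_prompt(EXEMPLARS, target))

    # A made-up completion in the exemplar format, to show answer extraction.
    sample = "Adding all the odd numbers (15, 5, 13, 7, 1) gives 41. The answer is False."
    print(extract_answer(sample))  # → False
```

Ending every exemplar with a fixed phrase is a design choice: the reasoning stays free-form while the final answer remains easy to score. If the model drifts from the exemplar format, `extract_answer` returns `None`, which is worth counting separately from wrong answers.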